According to OpenAI, its new model hallucinates less and is more accurate than GPT-5.1.

When OpenAI announced GPT-5.2 this December, the announcement was quiet, almost matter-of-fact. That is the clue to what this release really is: not hype, but a signal of maturity. GPT-5.2 is positioned as a model for professional knowledge work and sustained, agentic workflows.

Fidji Simo, OpenAI’s CEO of Applications, said something simple that carries more weight than you might realize:

“We designed GPT-5.2 to unlock even more economic value for people.”

It’s a clear indication of the audience OpenAI is targeting: builders, analysts, coders, researchers, and operators.

A Context Window Worth Paying Attention To

GPT-5.2 doesn’t dramatically expand the token window over GPT-5.1, but it uses that context much more effectively. Long-running work such as research summaries, changes across large codebases, and multi-document synthesis has historically been where large language models struggle.

GPT-5.2 is one of the first generative models we’ve seen stay consistent across a long context reliably and at scale. This matters if you intend to work with:

- Entire product specifications

- Multi-chapter documents

- Large code repositories

- Complex academic literature

Models that lose context in long tasks are useless for serious work. GPT-5.2 closes that gap.

Three GPT-5.2 Models for Different Workloads

OpenAI released three models this time: GPT-5.2 Instant, GPT-5.2 Thinking, and GPT-5.2 Pro.

OpenAI is moving away from one-size-fits-all AI models, on the view that context and depth matter more than conversational fluency. Professional and agentic work requires very different behavior from casual chat.
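For teams deciding which variant to send a given job to, the idea can be sketched as a small routing rule. This is only an illustration in Python: the `Task` fields, the `choose_variant` function, the step threshold, and the returned labels are all assumptions made for this example, not OpenAI guidance or real API model identifiers.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Rough profile of a piece of work (fields are illustrative assumptions)."""
    steps: int                 # expected number of reasoning or tool-use steps
    high_stakes: bool = False  # True when mistakes are costly to fix later


def choose_variant(task: Task) -> str:
    """Pick a variant label for a task.

    The labels mirror the three announced variants; the threshold of
    3 steps is an arbitrary assumption chosen for the example.
    """
    if task.high_stakes:
        return "pro"        # deepest reasoning for work where errors are expensive
    if task.steps > 3:
        return "thinking"   # multi-step work that benefits from deliberate reasoning
    return "instant"        # short, low-risk requests where speed matters most


if __name__ == "__main__":
    print(choose_variant(Task(steps=1)))
    print(choose_variant(Task(steps=8)))
    print(choose_variant(Task(steps=8, high_stakes=True)))
```

In practice the routing signal would come from your own workload data, such as how often a task type needs rework, rather than from a fixed step count.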

Competition Made This Shift Real

It’s hard to understand GPT-5.2 without seeing the competitive backdrop.

Sam Altman publicly acknowledged that OpenAI went into “code red” mode multiple times this year. These internal pushes were designed to close competitive gaps, especially after Google’s Gemini 3 and other rivals made real technical gains.

The result is innovation driven by pressure, and that pressure is coming from real enterprise demands.

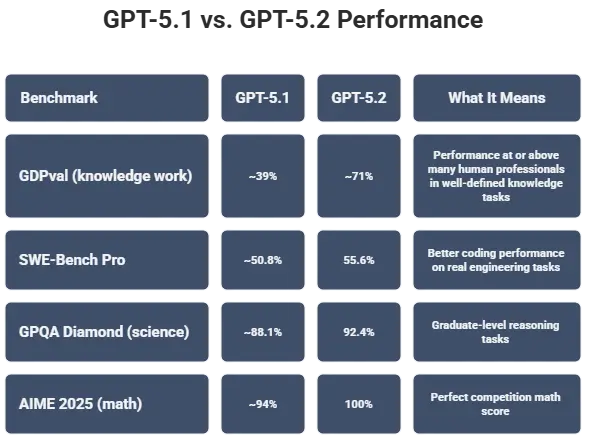

Benchmarks That Actually Move the Needle

Benchmarks tell only part of the story. They show GPT-5.2 outperforming GPT-5.1 on math, coding, and reasoning tests, but these metrics only matter if the model behaves consistently in real workflows.

From early public reports and community feedback, several practical patterns are emerging:

- GPT-5.2 generates longer documents with fewer hallucinations and fewer corrections needed

- Coding results are more dependable for refactors and migrations

- Vision and multimodal reasoning feel less brittle when interpreting diagrams or charts

- The model holds up in multi-step tasks that require integrated tool use

Not all of the feedback is positive: some Reddit users report that the safety filters and moderation layers feel restrictive, especially for experienced users.

Where GPT-5.2 Is Truly Different From Earlier Models

Early GPT models were assessed primarily on conversational ability. GPT-5.2 is being evaluated on:

- Workflow reliability

- Task completeness

- Multi-step reasoning

- Professional output quality

This marks a shift in how large language models are judged. What matters is not how clever the response sounds, but what the system produces over time. That’s why most enterprise analysis of GPT-5.2 focuses less on features and more on reliability and context retention.
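One way to make criteria like workflow reliability and task completeness measurable is to rerun the same multi-step task and count how often it finishes cleanly. The following is a minimal sketch in Python, not an established benchmark: `reliability_report` and the `run_task` hook are hypothetical names introduced here, and the actual model call and per-step checks are left to the caller.

```python
from typing import Callable, Dict, Sequence


def reliability_report(
    run_task: Callable[[], Sequence[bool]], trials: int = 5
) -> Dict[str, float]:
    """Run a multi-step workflow several times and summarize how it held up.

    run_task is a hypothetical hook supplied by the caller. Each call executes
    the workflow once and returns one boolean per step (True = step passed).
    """
    if trials <= 0:
        raise ValueError("trials must be positive")

    completed = 0    # runs in which every step passed
    step_passes = 0  # passed steps across all runs
    step_total = 0   # attempted steps across all runs

    for _ in range(trials):
        results = list(run_task())
        step_total += len(results)
        step_passes += sum(results)
        if results and all(results):
            completed += 1

    return {
        # Share of runs that finished with no failed step (task completeness).
        "completion_rate": completed / trials,
        # Share of individual steps that passed (step-level reliability).
        "step_pass_rate": step_passes / step_total if step_total else 0.0,
    }


if __name__ == "__main__":
    # Stand-in for a real workflow: two canned runs, one with a failed step.
    canned = iter([[True, True, True], [True, False, True]])
    print(reliability_report(lambda: next(canned), trials=2))
```

A high step pass rate alongside a low completion rate is the pattern to watch for: it means the model gets most steps right but rarely gets through a whole task without a slip, which is exactly the failure mode that conversational benchmarks tend to hide.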

What GPT-5.2 Means for Leaders and Teams

For organizations investing in AI:

- GPT-5.2 may become the default model for internal assistants

- It may replace portions of research and synthesis workflows

- It may reduce dependency on external toolchains

- It may augment technical reasoning work previously handled only by expert humans

Unlike with earlier releases, the question “Can we use this in production?” is increasingly being answered with a yes.

Conclusion

GPT-5.2 represents a shift from conversational AI toward dependable, agentic knowledge work. With stronger context retention, improved reliability, and models tuned for real workloads, it is built for professionals, not demos. For teams evaluating production-ready AI, GPT-5.2 answers the reliability question more clearly than past releases. This release signals how OpenAI now defines progress: sustained output over time.

What is GPT-5.2 designed for?

GPT-5.2 is designed for professional knowledge work and agentic workflows that require consistency over long tasks.

How is GPT-5.2 different from GPT-5.1?

GPT-5.2 improves context retention, reduces hallucinations, and performs more reliably in multi-step workflows.

What are the GPT-5.2 model variants?

OpenAI released Instant, Thinking, and Pro models to support different depths of reasoning and workload complexity.

Why does context retention matter for agentic workflows?

Long-running tasks like research, coding, and synthesis fail when models lose context, making reliability essential.

Can GPT-5.2 be used in production environments?

Early feedback suggests GPT-5.2 is increasingly suitable for production use due to improved stability and output quality.