As we write this, ChatGPT is the world’s 4th most-visited website, with over 700 million weekly active users. It has become the global go-to tool for anything and everything: from writing emails and planning events to coding, entire digital marketing campaigns, and questions that could have been Googled.

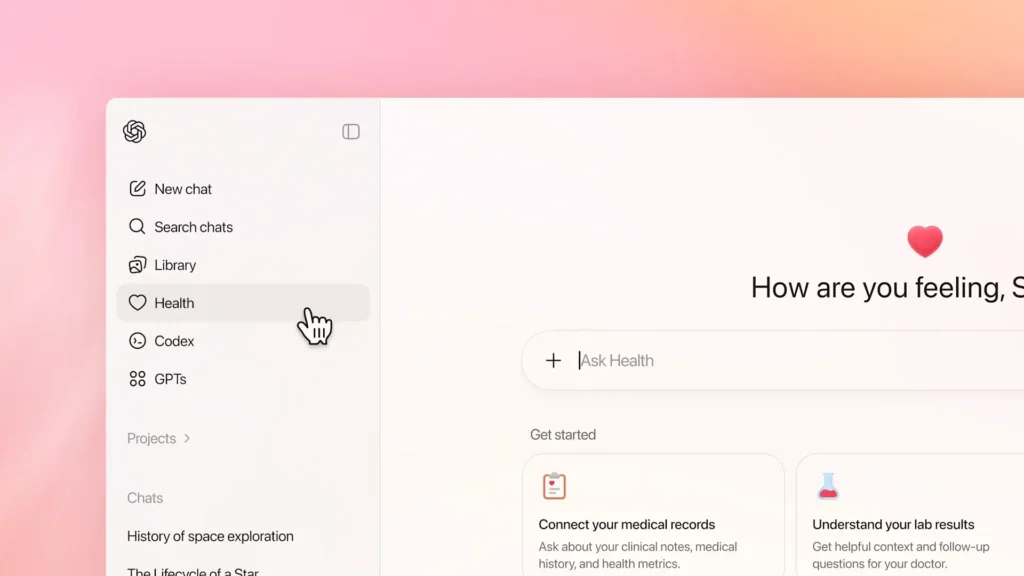

Now OpenAI has launched ChatGPT Health, a sandboxed space inside ChatGPT for health-related questions. It’s being positioned as a “healthcare ally.”

It’s OpenAI’s clearest move yet into one of the most sensitive domains it touches: personal health.

What ChatGPT Health actually is

ChatGPT Health is a separate tab inside ChatGPT with its own chat history and memory. Health conversations are isolated from your regular chats.

Users are encouraged to connect personal data sources to get more grounded responses, including:

- Medical records (via b.well, which integrates with ~2.2 million healthcare providers)

- Apple Health (movement, sleep, activity)

- MyFitnessPal and Weight Watchers (nutrition)

- Peloton (fitness)

- Function (lab test insights)

OpenAI says this allows ChatGPT to help users:

- Understand lab results and visit summaries

- Prepare questions for doctor appointments

- Interpret fitness, diet, and activity patterns

- Compare insurance options based on usage patterns

Access is currently via a waitlist. Rollout will expand gradually and won’t be limited to paid users.

What it is not

OpenAI repeats this line often, and for good reason:

“ChatGPT Health is not intended for diagnosis or treatment.”

That disclaimer isn’t theoretical; there’s history here.

Physicians documented a case in which a man was hospitalized for weeks after following ChatGPT’s advice to replace table salt with sodium bromide, which gave him bromism, a form of bromide poisoning that was common in the 19th and early 20th centuries. Google’s AI Overviews also faced backlash for dangerous health guidance, from dietary misinformation to unsafe medical suggestions.

Source: The Guardian

OpenAI can’t control what users do after they close the chat. That’s the core risk.

Scale changes the stakes

According to OpenAI:

- 230+ million people ask ChatGPT health or wellness questions every week

- In underserved rural communities, users send ~600,000 healthcare-related messages weekly

- 70% of health conversations happen outside clinic hours

Over the last two years, OpenAI says it worked with 260+ physicians, who reviewed model outputs 600,000+ times across 30 medical focus areas.

This explains why OpenAI didn’t treat ChatGPT Health as an experiment. People were already using ChatGPT this way. The product formalizes that behavior.

Source: OpenAI blog

Mental health: acknowledged, carefully

OpenAI avoided highlighting mental health in its launch post. That omission stood out.

When asked directly, Fidji Simo (CEO of Applications at OpenAI) said:

“Mental health is certainly part of health in general… We are very focused on making sure that in situations of distress we respond accordingly and we direct toward health professionals.”

OpenAI says the system is tuned to be informative without being alarmist and to redirect users toward professionals during distress.

Whether that tuning works consistently is still an open question.

Privacy, security, and the legal reality

OpenAI emphasizes that ChatGPT Health operates with “enhanced privacy.”

What that means in practice:

- Health chats are separate from normal chats

- Conversations in ChatGPT Health are not used to train models by default

- Additional encryption layers are used (not end-to-end)

- Users are nudged into the Health tab if a health discussion starts elsewhere

But there are limits:

- OpenAI has had past data exposure incidents (notably March 2023)

- In cases of valid legal requests or emergencies, data can still be accessed

- HIPAA does not apply here

As Nate Gross, OpenAI’s Head of Health, put it:

“HIPAA governs clinical and professional healthcare settings. Consumer AI products don’t fall under it.”

This distinction matters more than most users realize.

ChatGPT Health is useful. It’s also unfinished and operating in a space where mistakes carry real consequences.

Used as a preparation tool, an explainer, or a second lens, it has value. Based on our research, one of its most sensible uses is asking ChatGPT to help brainstorm questions ahead of a doctor’s appointment. Augmenting your existing care this way, rather than replacing it, is a good idea.

But used as an authority, it becomes a real risk. The technology is moving faster than regulation, and, as always, the burden shifts to users to know where the line is. And that line isn’t always obvious.

Conclusion

ChatGPT Health formalizes how millions already use AI for health questions, but scale raises the stakes. As a preparation and understanding tool, it can add real value to existing care. Used as an authority, it introduces serious risk in a domain where errors matter. The technology is advancing faster than regulation, leaving users to navigate an unclear line.

FAQs

What is ChatGPT Health?

ChatGPT Health is a separate space inside ChatGPT designed for health-related questions with isolated chat history and enhanced privacy.

Can ChatGPT Health diagnose or treat medical conditions?

No. OpenAI states it is not intended for diagnosis or treatment and should not replace professional medical advice.

What personal data can users connect to ChatGPT Health?

Users can connect medical records, fitness data, nutrition apps, and lab insights to receive more contextual responses.

How private are conversations in ChatGPT Health?

Health chats are separated from regular chats and not used for training by default, but they are not covered by HIPAA.

What are the risks of using ChatGPT Health?

Misinterpretation, overreliance, and acting on advice without professional oversight can lead to real harm.